Explainable AI – In artificial intelligence and machine learning, Explainable AI (XAI) is the key to understanding what happens inside the intricate “black box.”

Many machine learning models are opaque or inscrutable, which raises concerns about how they reach their decisions. This opacity poses challenges when these models affect critical areas such as finance, healthcare, and criminal justice. The need for explainability is clearest where decisions influence human lives, such as loan approvals, medical diagnoses, or legal judgments. Understanding how and why a model reaches a specific decision fosters trust, accountability, and ethical use of AI technologies.

Complex Decision Tree Diagram for Decision Making with AILabPage Integration

Key Basics of Explainable AI (XAI)

Explainable AI (XAI) refers to the capability of artificial intelligence systems to provide clear and understandable insights into their decision-making processes. The primary goal of XAI is to demystify the “black box” nature of complex machine learning models, making their outputs and reasoning accessible to humans. This is particularly crucial in scenarios where the consequences of AI decisions impact individuals, society, or industries.

- Transparency: XAI emphasizes transparency in AI algorithms. It seeks to make the decision-making process of AI models understandable and interpretable for humans.

- Interpretability: Interpretability involves presenting AI outputs in a manner that can be easily comprehended by non-experts. This ensures that users understand why a specific decision or recommendation was made.

- Trust and Accountability: By providing explanations for AI decisions, XAI enhances trust in the technology. It holds AI systems accountable for their actions, fostering responsible and ethical use.

- Human-Centric Design: XAI promotes the development of AI systems with human-centric design principles. It recognizes the importance of aligning AI technology with human values and expectations.

- Model-Agnostic Techniques: XAI techniques are often model-agnostic, meaning they can be applied across various machine learning models. This flexibility enables the application of explainability to a wide range of AI systems.

- Stakeholder Involvement: XAI encourages the active involvement of stakeholders, including end-users, domain experts, and regulatory bodies, in the development and deployment of AI systems.

- Ethical Considerations: XAI addresses ethical concerns related to AI by ensuring that the decision-making processes are fair, unbiased, and aligned with societal norms.

- Application Areas: XAI finds applications in critical domains such as healthcare, finance, criminal justice, and autonomous systems, where clear explanations for AI decisions are crucial.

In essence, Explainable AI bridges the gap between the complexity of advanced algorithms and the need for comprehensibility, accountability, and ethical use. By clarifying the logic behind predictions and outcomes, it makes AI systems more accessible, accountable, and trustworthy, which supports their responsible integration into various aspects of our lives.

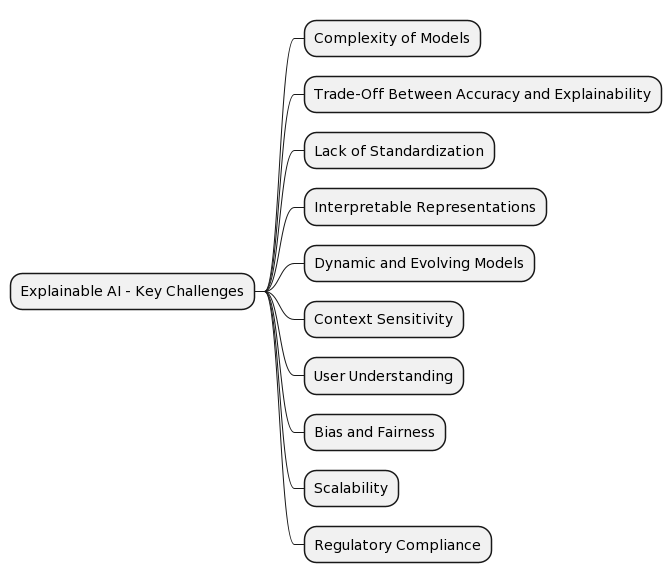

Explainable AI – Key Challenges

These challenges make researchers like me, and many practitioners, sweat. Complex as they are, they must be addressed if transparent and understandable AI systems are to be implemented and adopted effectively. One prominent challenge is that many machine learning models cannot articulate the rationale behind their predictions. This is particularly pronounced in deep neural networks, often called a “black box” because of their intricate, layered architectures. Some of the key challenges are as follows:

- Complexity of Models: Modern machine learning models, especially deep neural networks, often operate as complex black boxes. Explaining the decision-making process of these intricate models is challenging due to their high dimensionality and non-linear relationships.

- Trade-Off Between Accuracy and Explainability: There is often a trade-off between the accuracy of a model and its explainability. Simplifying a model for better interpretability may lead to a reduction in its predictive performance, creating a dilemma for practitioners.

- Lack of Standardization: The field lacks standardized methods for measuring and evaluating the explainability of AI models. This absence of a common framework makes it difficult to compare and benchmark different XAI techniques.

- Interpretable Representations: Generating human-interpretable representations of complex features or abstract concepts learned by AI models poses a significant challenge. Transforming high-dimensional data into understandable formats remains an ongoing research area.

- Dynamic and Evolving Models: Many real-world applications involve dynamic and evolving data. Adapting XAI techniques to handle changes in model behavior over time, especially in dynamic environments, is a challenge.

- Context Sensitivity: AI systems often make decisions based on complex contextual information. Capturing and presenting this context in an interpretable manner is challenging and requires addressing the contextual nuances of different applications.

- User Understanding: Ensuring that explanations provided by AI models are understandable and meaningful to end-users with varying levels of technical expertise remains a challenge. Bridging the gap between technical intricacies and user comprehension is crucial.

- Bias and Fairness: Addressing bias and ensuring fairness in AI models is intertwined with explainability. If an AI system produces biased outcomes, explaining those decisions transparently becomes essential for accountability and ethical considerations.

- Scalability: Developing scalable XAI techniques that can handle large datasets and computationally intensive models is a challenge. Ensuring that explanations remain efficient as models grow in complexity is crucial for real-world applications.

- Regulatory Compliance: Adhering to existing and evolving regulations related to transparency and accountability in AI poses a challenge. Developing XAI methods that align with legal and ethical frameworks is essential for widespread adoption.

Overcoming these challenges is crucial for advancing the field of Explainable AI and ensuring that AI systems are not only accurate but also transparent, understandable, and trustworthy in diverse applications.
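To make the accuracy versus explainability trade-off concrete, here is a small sketch in plain Python. Everything in it is an assumption chosen for illustration: the toy data follows a curved relationship, the "explainable" model is a straight line you can describe with two numbers, and the "flexible" model is a 5-nearest-neighbour average that has no such compact summary. On real data the gap can be much smaller, or absent.

```python
import random

# Toy data (an assumption for illustration): y = x^2 plus a little noise.
rng = random.Random(0)
xs = [rng.uniform(-3, 3) for _ in range(200)]
ys = [x * x + rng.gauss(0, 0.2) for x in xs]
train_x, test_x = xs[:150], xs[150:]
train_y, test_y = ys[:150], ys[150:]


def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept."""
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def knn_predict(query, x, y, k=5):
    """Average the targets of the k training points closest to the query."""
    nearest = sorted(zip(x, y), key=lambda pair: abs(pair[0] - query))[:k]
    return sum(target for _, target in nearest) / k


def mse(predictions, targets):
    """Mean squared error between two equal-length sequences."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


slope, intercept = fit_line(train_x, train_y)
mse_linear = mse([slope * x + intercept for x in test_x], test_y)
mse_knn = mse([knn_predict(x, train_x, train_y) for x in test_x], test_y)

print(f"line: y = {slope:.2f} * x + {intercept:.2f}, test MSE = {mse_linear:.2f}")
print(f"5-nearest neighbours: no two-number summary, test MSE = {mse_knn:.2f}")
```

The line is easy to explain but misses the curve, while the neighbour-based predictor follows the data more closely at the cost of a simple global description. That is the dilemma in miniature, on data deliberately built to show it.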

Explainable AI – Techniques

Explainable AI employs various techniques to shed light on these opaque models. One approach involves generating model-agnostic explanations, allowing users to comprehend decisions without delving into the intricacies of each algorithm.
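One widely used model-agnostic idea is permutation importance: shuffle one input column at a time and see how much the model's accuracy drops. The sketch below is a minimal plain-Python version, not any particular library's API. The `black_box` function and its feature names (income, debt, noise) are hypothetical stand-ins for an opaque model, and the data is randomly generated.

```python
import random


def black_box(row):
    """Hypothetical opaque model: the third feature is ignored on purpose."""
    income, debt, _noise = row
    return 1 if (0.8 * income - 0.5 * debt) > 0.2 else 0


def accuracy(predict, rows, labels):
    """Fraction of rows where the prediction matches the label."""
    hits = sum(1 for r, y in zip(rows, labels) if predict(r) == y)
    return hits / len(rows)


def permutation_importance(predict, rows, labels, n_features, seed=0):
    """Accuracy drop after shuffling each feature column in turn.

    Only calls predict(), so it works for any model, whatever is inside it.
    """
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with feature j replaced by its shuffled value.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(baseline - accuracy(predict, shuffled, labels))
    return importances


# Synthetic data (an assumption): three uniform random features per row,
# labelled by the model itself so the baseline accuracy is 1.0.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(500)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels, n_features=3)
for name, score in zip(["income", "debt", "noise"], importances):
    print(f"{name}: accuracy drop = {score:.3f}")
```

Shuffling income or debt hurts accuracy, while shuffling the ignored noise column changes nothing, and we learn that without ever looking inside `black_box`.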

- Interpretable Techniques: Techniques such as LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) explain individual predictions. LIME fits a simple, interpretable surrogate model around a single prediction of a black-box model, while SHAP uses Shapley values from cooperative game theory to assign each feature a contribution to the model output.

- Inherently Interpretable Models: Another route is to use inherently interpretable models such as shallow decision trees and linear models. Unlike complex black-box models, their structure (a tree's splits, a linear model's coefficients) can be read directly, so no separate explanation step is needed.

- Ethical and Regulatory Compliance: Explainable AI goes beyond interpretability; it plays a pivotal role in meeting ethical considerations and regulatory requirements. In industries like finance and healthcare, where regulations are stringent, transparency in AI decision-making is imperative. Regulators increasingly expect AI systems to offer clear justifications for their decisions, and meeting that expectation builds trust with customers and stakeholders.
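The idea behind SHAP-style attributions can be sketched by computing exact Shapley values for a tiny model. This is a brute-force illustration in plain Python, not the SHAP library: it enumerates every feature subset, which is only feasible for a handful of features. The two models, the instance `x`, and the all-zero baseline are hypothetical choices made for the example.

```python
from itertools import combinations
from math import factorial


def model(x):
    """Hypothetical scoring model with an interaction between features 0 and 2."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]


def linear_model(x):
    """Hypothetical inherently interpretable model: a plain weighted sum."""
    return 0.5 * x[0] - 2.0 * x[1] + 4.0 * x[2]


def shapley_values(f, x, baseline):
    """Exact Shapley value of each feature for the prediction f(x).

    A feature that is 'absent' from a subset is set to its baseline value.
    """
    n = len(x)

    def value(subset):
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return f(mixed)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a subset of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = value(set(subset) | {i})
                without_i = value(set(subset))
                total += weight * (with_i - without_i)
        phi.append(total)
    return phi


x = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]

phi = shapley_values(model, x, baseline)
phi_linear = shapley_values(linear_model, x, baseline)

print("interaction model:", [round(p, 3) for p in phi])
print("linear model:     ", [round(p, 3) for p in phi_linear])
```

For the interaction model the contributions work out to 3.5, 2.0 and 1.5, which sum to the gap between the prediction and the baseline prediction (7.0). The interaction term is split evenly between the two features involved. For the linear model each contribution is simply the coefficient times the feature value, which is why such models are considered interpretable without any extra machinery.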

As we navigate the evolving landscape of AI, Explainable AI stands as a pivotal force shaping responsible and accountable machine learning practices. It not only facilitates a deeper understanding of AI models but also fosters a future where advanced technologies coexist harmoniously with transparency, ethics, and human values. Through the lens of Explainable AI, the once enigmatic black box of machine learning begins to reveal its inner workings, empowering users to trust and embrace the potential of AI in a transparent and accountable manner.

Conclusion – Explainable AI underscores the importance of transparency in artificial intelligence systems. A deep understanding of how AI reaches its decisions is essential for building trust and ensuring responsible deployment, and as the machine learning black box is opened up, explainability becomes integral to ethical AI practice. In critical applications like healthcare, finance, and autonomous systems, where AI decisions have tangible consequences, transparency is non-negotiable. Shedding light on the inner workings of AI algorithms helps stakeholders grasp the rationale behind AI decisions, fostering accountability and minimizing potential biases.

—

Feedback & Further Questions

Besides life lessons, I do write-ups on technology, which is my profession. Do you have any burning questions about big data, AI and ML, blockchain, or FinTech; about the basics of theoretical physics, which is my passion; or about photography or Fujifilm (SLRs or lenses), which is my avocation? Please feel free to ask by leaving a comment or sending me an email. I will do my best to quench your curiosity.


